Introduction: When verification activity does not create confidence

Many verification organisations recognise the symptoms early. Verification deadlines slip despite sustained effort. Bugs appear late, including in basic use cases. Different teams achieve noticeably different quality levels. New verification engineers take longer than expected to become effective. Similar mistakes recur across programmes.

These outcomes are rarely caused by a lack of verification effort. More often, they reflect uneven verification capability across teams, projects, and lifecycle stages.

Verification capability benchmarking exists to expose this variation. It provides an objective, organisation-wide view of how verification is planned, executed, measured, and closed, and how consistently those practices are applied.

Why organisations benchmark verification capability

Benchmarking is not about comparing teams competitively. It is about understanding current capabilities to improve future outcomes.

Organisations benchmark verification capability to:

Without structured assessment, verification improvement is often reactive. Changes are made after failures rather than guided by evidence. Benchmarking replaces anecdotal diagnosis with systematic evaluation.

Benchmarking in general

Benchmarking is a structured method for understanding current capability by comparing observed practices against defined reference models. In engineering disciplines, benchmarking is used to establish baselines, identify gaps, and prioritise improvement actions based on evidence rather than perception.

In verification, benchmarking is most effective when it focuses on how work is actually performed across teams and projects, rather than on documented processes alone. This allows organisations to distinguish between isolated execution issues and systemic capability limitations.

Verification capability benchmarking applies these general principles in a verification-specific context, enabling objective assessment, comparison across programmes, and continuous improvement.

What verification capability benchmarking measures

Verification capability benchmarking evaluates how verification is actually performed, not how it is described in methodology documents or process guidelines. The assessment focuses on observable behaviour, decision-making discipline, and evidence produced during real verification work.

It assesses three tightly coupled dimensions:

For organisations looking to examine their verification practices using a structured, evidence-based approach, Alpinum’s Verification Capability Benchmarking Service provides a practical framework for assessment and comparison. This approach draws on DV-CMM principles specifically adapted for functional verification, rather than on general-purpose software process maturity models.

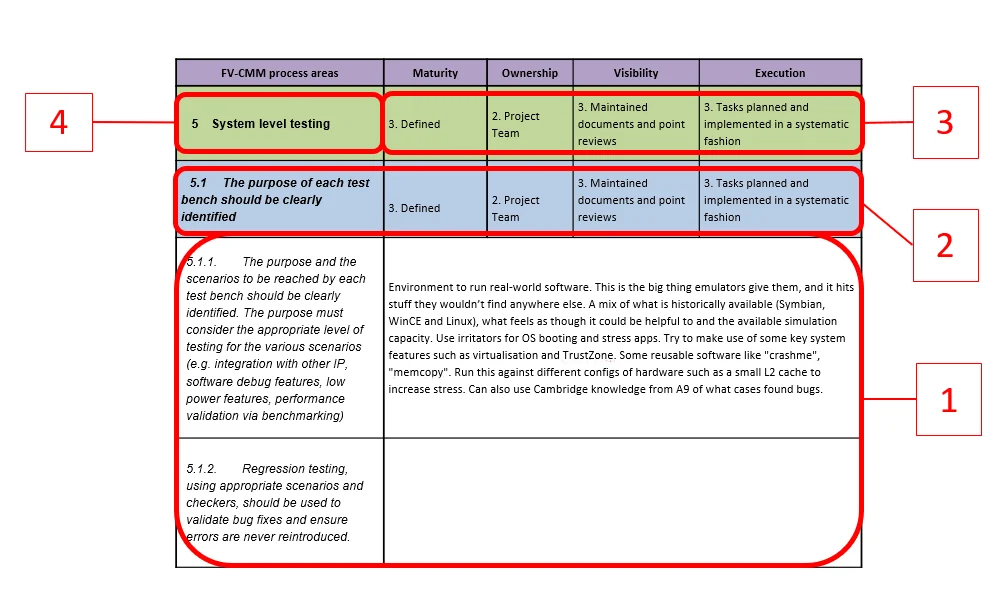

Figure 1: Verification Capability Benchmarking Framework

Figure 1 illustrates how verification capability is assessed across an organisation by linking defined process areas with explicit maturity, ownership, visibility, and execution criteria. Instead of viewing verification as a collection of independent activities, the framework makes explicit how system-level intent is translated into concrete verification work and examined using bottom-up evidence from real projects.

How the framework is applied in practice

Figure 1 highlights four aspects that are assessed together to establish verification capability across an organisation:

- Defined process areas

Each verification activity is evaluated within a clearly defined process area, such as specification intent, system-level testing, regression strategy, or closure discipline. This ensures assessment reflects how verification work is actually structured, rather than how it is described in abstract methodologies.

- Maturity of execution

Maturity captures how repeatable, controlled, and measurable each activity is. This includes whether practices are ad hoc, defined, consistently applied, or systematically measured and improved over time.

- Ownership and visibility

Benchmarking examines who owns each verification activity and how progress and quality are made visible. Clear ownership and documented review points are critical to ensuring verification intent is not lost as programmes scale.

- Evidence-based execution

Execution is evaluated using bottom-up evidence from real projects, including test results, regressions, coverage data, and review artefacts. This ensures capability is assessed based on observable behaviour rather than reported compliance.

Together, these four elements allow verification capability to be assessed consistently across teams, projects, and lifecycle stages.

Verification capability maturity in practice

While Figure 1 defines how verification capability is assessed, maturity becomes evident through the evolution of ownership, visibility, and execution in practice. Benchmarking makes these characteristics explicit by examining how verification activities progress from informal execution to disciplined, organisation-wide practice.

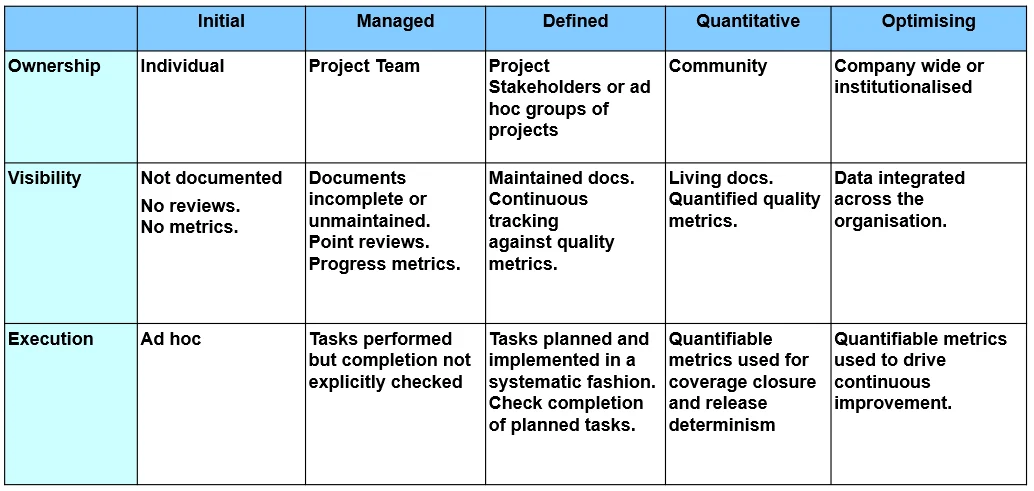

Table 1: Verification Capability Maturity Characteristics

This table illustrates how verification capability typically evolves across ownership, visibility, and execution. Early stages are characterised by individual ownership, limited documentation, and ad hoc execution. As maturity increases, responsibility broadens, visibility improves through maintained artefacts and metrics, and execution becomes systematic and measurable. At higher maturity levels, verification data is integrated across the organisation and used to drive continuous improvement.

Benchmarking does not assume that all verification activities must operate at the highest maturity level. Instead, it exposes misalignment between ownership, visibility, and execution: for example, where tasks are performed systematically but evidence is not reviewed, or where metrics exist without clear accountability. These misalignments are a common source of late-stage risk, inconsistent quality, and reduced confidence in verification closure decisions.
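The kind of misalignment described above can be expressed as a simple check. The sketch below is a minimal illustration only. It assumes a hypothetical 1 to 5 scale for each dimension (the article does not prescribe numeric levels) and a hypothetical tolerance of one level; `ActivityAssessment` and `misalignments` are invented names, not part of any Alpinum or DV-CMM tooling.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import combinations


@dataclass
class ActivityAssessment:
    """Hypothetical assessment of one process area on an assumed 1-5 scale."""
    process_area: str
    ownership: int   # 1 = individual and informal ... 5 = organisation-wide
    visibility: int  # 1 = undocumented ... 5 = integrated, reviewed metrics
    execution: int   # 1 = ad hoc ... 5 = systematically measured and improved


def misalignments(a: ActivityAssessment, tolerance: int = 1) -> list[str]:
    """Report dimension pairs whose levels differ by more than `tolerance`."""
    levels = {
        "ownership": a.ownership,
        "visibility": a.visibility,
        "execution": a.execution,
    }
    findings = []
    for x, y in combinations(levels, 2):
        gap = levels[x] - levels[y]
        if abs(gap) > tolerance:
            # Name the dimension that has run ahead of the other one.
            higher, lower = (x, y) if gap > 0 else (y, x)
            findings.append(
                f"{a.process_area}: {higher} ({levels[higher]}) "
                f"outpaces {lower} ({levels[lower]})"
            )
    return findings


if __name__ == "__main__":
    # Regressions are run systematically, but their results are rarely reviewed.
    regression = ActivityAssessment(
        "regression strategy", ownership=3, visibility=2, execution=4
    )
    for finding in misalignments(regression):
        print(finding)
```

A process area assessed at a uniformly low level produces no findings here, which mirrors the point above: the check looks for mismatch between dimensions, not for distance from the highest maturity level.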

When assessment follows this structure, gaps tend to emerge during normal review rather than through special analysis. Variations between teams, projects, and lifecycle stages become visible when practices are consistently examined, not because additional metrics are introduced. This approach supports benchmarking that is comparable across the organisation and grounded in how verification is actually performed, while providing a shared language for discussing verification performance, risk, and improvement priorities.

Process areas across the verification lifecycle

Benchmarking evaluates verification capability across defined process areas rather than isolated activities. These typically include:

Assessing these areas collectively reveals gaps that remain invisible when teams focus solely on execution metrics.

Top-down intent and bottom-up evidence

Adequate verification depends on alignment between the system’s intent and the evidence produced.

Benchmarking evaluates:

When this alignment is weak, verification completion becomes an activity milestone rather than a risk-reduction milestone.
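To make the distinction concrete, the sketch below contrasts an activity-based completion figure with an intent-based one. It assumes a hypothetical mapping from intent items (for example, requirements or features) to the evidence linked to them; the `Evidence` type, the intent names, and both metrics are illustrative assumptions, not a prescribed benchmarking measure.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Evidence:
    """One hypothetical piece of evidence linked to an intent item."""
    kind: str     # e.g. "test", "coverage", "review" (illustrative labels)
    passed: bool


def activity_completion(intent_map: dict[str, list[Evidence]]) -> float:
    """Fraction of all evidence items that passed: a measure of activity."""
    items = [e for evidence in intent_map.values() for e in evidence]
    return sum(e.passed for e in items) / len(items) if items else 0.0


def unsupported_intent(intent_map: dict[str, list[Evidence]]) -> list[str]:
    """Intent items with no passing evidence linked to them."""
    return sorted(
        item for item, evidence in intent_map.items()
        if not any(e.passed for e in evidence)
    )


def intent_completion(intent_map: dict[str, list[Evidence]]) -> float:
    """Fraction of intent items backed by passing evidence: a measure of risk reduction."""
    if not intent_map:
        return 0.0
    supported = len(intent_map) - len(unsupported_intent(intent_map))
    return supported / len(intent_map)


if __name__ == "__main__":
    # Hypothetical intent items; low-power entry has no evidence at all.
    intent_map = {
        "boot sequence": [Evidence("test", True)] * 3,
        "warm reset": [Evidence("test", True)],
        "low-power entry": [],
    }
    print(f"activity completion: {activity_completion(intent_map):.0%}")
    print(f"intent completion:   {intent_completion(intent_map):.0%}")
    print("no evidence for:", unsupported_intent(intent_map))
```

In this example every linked test passes, so the activity figure suggests verification is complete, while one intent item has no evidence at all. That gap is what separates an activity milestone from a risk-reduction milestone.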

Organisational capability and scaling effects

Verification capability is shaped as much by organisational factors as by technical ones. In practice, organisational effects become visible when verification capability is examined across multiple process areas rather than through individual metrics or activity counts.

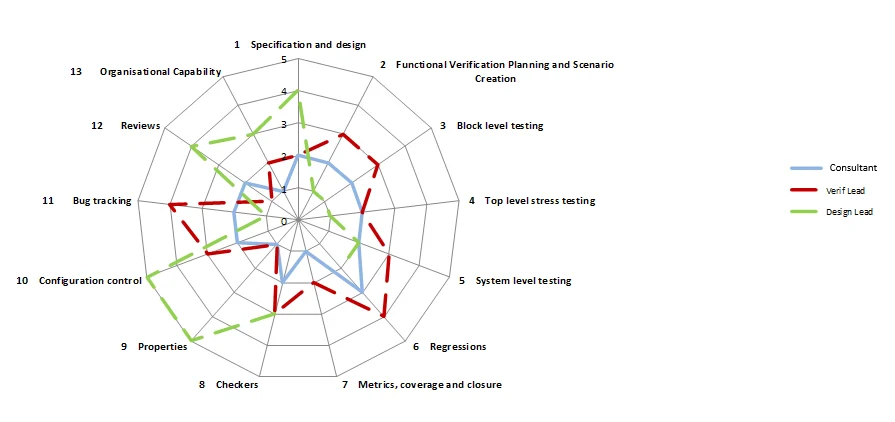

Figure 2: Example verification capability profile across organisational process areas

Example radar view illustrating how verification capability can vary across workflow and organisational process areas when assessed consistently across the lifecycle.

Such views highlight that verification capability does not scale uniformly as organisations grow. Some process areas mature quickly, while others remain dependent on individual experience or local practice. These imbalances often correlate with organisational factors such as unclear ownership, inconsistent review discipline, or uneven training and ramp-up, rather than with tool availability or effort alone.
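One way to surface this unevenness from assessment data is to compare the same process area across teams. The sketch below assumes hypothetical per-team maturity levels on a 1 to 5 scale and an arbitrary spread threshold; the team names, levels, and function names are invented for illustration. A wide spread suggests an area that still depends on local practice rather than on organisation-wide capability.

```python
from __future__ import annotations


def capability_profile(
    levels_by_team: dict[str, dict[str, int]],
) -> dict[str, tuple[float, int]]:
    """Per process area: (mean level across teams, spread = max - min)."""
    areas = sorted({area for team in levels_by_team.values() for area in team})
    profile = {}
    for area in areas:
        levels = [team[area] for team in levels_by_team.values() if area in team]
        profile[area] = (sum(levels) / len(levels), max(levels) - min(levels))
    return profile


def uneven_areas(profile: dict[str, tuple[float, int]], max_spread: int = 1) -> list[str]:
    """Process areas whose team-to-team spread exceeds `max_spread`."""
    return [area for area, (_, spread) in profile.items() if spread > max_spread]


if __name__ == "__main__":
    # Hypothetical assessment results for three teams.
    levels_by_team = {
        "team_a": {"regression strategy": 4, "closure discipline": 2},
        "team_b": {"regression strategy": 4, "closure discipline": 4},
        "team_c": {"regression strategy": 3, "closure discipline": 1},
    }
    profile = capability_profile(levels_by_team)
    for area, (avg, spread) in profile.items():
        print(f"{area:20s} mean={avg:.1f} spread={spread}")
    print("dependent on local practice:", uneven_areas(profile))
```

A radar view such as Figure 2 shows a level per process area; tracking the spread alongside it keeps team-to-team variation from being hidden behind an organisational average.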

Benchmarking, therefore, evaluates:

As organisations scale, informal practices no longer provide sufficient control. Benchmarking identifies where process definition and governance must evolve to support growth without compromising verification quality.

AI adoption within verification capability

AI-based techniques are increasingly used within verification, from stimulus generation to results analysis. Benchmarking does not assume that AI adoption automatically improves capability.

Instead, it evaluates:

Capability maturity requires that AI enhance the quality of evidence, not just throughput.

From benchmarking to improvement

The outcome of verification capability benchmarking is not a score. It is a structured improvement roadmap. Benchmarking enables organisations to define priorities, track progress, and validate that process changes lead to improved verification outcomes rather than additional overhead.

Continue Exploring

If you would like to explore more work in this area, see the related articles in the Verification section on the Alpinum website:

👉 https://alpinumconsulting.com/resources/blogs/verification/

For discussion, collaboration, or technical engagement, contact Alpinum Consulting here:

👉 https://alpinumconsulting.com/contact-us/

Written by: Mike Bartley

Mike started in software testing in 1988 after completing a PhD in Mathematics, and moved to semiconductor Design Verification (DV) in 1994. He has verified designs (on silicon and FPGA) for commercial and safety-related sectors such as mobile phones, automotive, comms, cloud/data servers, and Artificial Intelligence. Mike built and managed state-of-the-art DV teams inside several companies, specialising in CPU verification.

Mike founded and grew a DV services company to more than 450 engineers globally, successfully delivering services and solutions to more than 50 clients.

Mike started Alpinum in April 2025 to deliver a range of state-of-the-art industry solutions:

- Alpinum AI provides tools and automations using Artificial Intelligence to help companies reduce development costs (by up to 90%).
- Alpinum Services provides RTL-to-GDS VLSI services from nearshore and offshore centres in Vietnam, India, Egypt, Eastern Europe, Mexico and Costa Rica.
- Alpinum Consulting provides strategic board-level consultancy services, helping companies to grow.
- Alpinum's training department provides self-paced, fully online training in SystemVerilog, UVM (introductory and advanced), Formal Verification, DV methodologies for SV, UVM, VHDL and OSVVM, and CPU/RISC-V.
- Alpinum Events organises a number of free-to-attend industry events.

You can contact Mike (mike@alpinumconsulting.com or +44 7796 307958) or book a meeting with Mike using Calendly (https://calendly.com/mike-alpinumconsulting).
