Large engineering programmes rarely fail due to neglected verification effort. Most programmes apply substantial verification effort, deploy mature tooling, and draw on experienced teams. Verification teams improve metrics, stabilise regressions, and deliver planned milestones. Yet late in the programme, uncertainty often increases rather than decreases. Decisions become more cautious, contingency grows, and confidence erodes precisely when it is most needed.

This pattern is not new, but it has become more pronounced as systems scale. Modern programmes integrate heterogeneous compute, complex interconnect, software-defined behaviour, safety and security requirements, and multiple suppliers under sustained delivery pressure. In this environment, verification activity can be extensive and technically competent, while programme-level risk continues to accumulate.

Understanding why this occurs requires a shift in perspective. System-level risk depends less on verification execution quality and more on structural programme factors. Programme risk arises from embedded assumptions, architectural limitations on observability, and decisions made under delivery pressure.

Risk Rarely Appears Where Developers Expect It

Programmes typically anticipate risk in visibly challenging areas: new IP, aggressive performance targets, or technologies with limited precedent. Verification effort naturally follows these expectations, focusing on components and behaviours already recognised as challenging. In doing so, developers build confidence around the areas they believe are most likely to fail, reinforced by progress metrics that show steady closure against known risks.

In practice, many late-stage failures originate outside this focus. They emerge from interactions between well-understood components, from combinations of operating modes that were individually verified but rarely exercised together, or from behaviours assumed to be benign under normal conditions.

Traditional progress indicators often fail to expose these risks, not because verification is incomplete, but because the underlying assumptions shaping where attention is applied remain unchallenged. By the time such issues surface, architectural flexibility is limited, and recovery options are constrained, making the risk appear sudden despite having been present throughout the programme.
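The gap between per-mode closure and combined exercise can be made concrete with a small sketch. The dimensions, values, and test records below are hypothetical and chosen only for illustration; they are not taken from any real programme or tool. The sketch shows every value of every dimension being hit in isolation while most combinations remain unexercised.

```python
from itertools import product

# Hypothetical operating dimensions; names and values are illustrative only.
DIMENSIONS = {
    "power": ["active", "retention", "off"],
    "security": ["secure", "non_secure"],
    "clock": ["full", "half"],
}

# Hypothetical record of the configuration each regression test ran under.
TESTS_RUN = [
    {"power": "active", "security": "secure", "clock": "full"},
    {"power": "active", "security": "non_secure", "clock": "half"},
    {"power": "retention", "security": "secure", "clock": "full"},
    {"power": "off", "security": "secure", "clock": "half"},
]


def per_dimension_coverage(tests):
    """Fraction of values hit in each dimension, considered in isolation."""
    return {
        dim: len({t[dim] for t in tests}) / len(values)
        for dim, values in DIMENSIONS.items()
    }


def unexercised_combinations(tests):
    """Combinations from the full cross product that no test exercised."""
    dims = list(DIMENSIONS)
    seen = {tuple(t[d] for d in dims) for t in tests}
    return [c for c in product(*(DIMENSIONS[d] for d in dims)) if c not in seen]


print(per_dimension_coverage(TESTS_RUN))
print(len(unexercised_combinations(TESTS_RUN)), "combinations never run together")
```

In this toy data, each dimension reports full coverage, yet 8 of the 12 possible combinations were never run. A real programme would have far more dimensions, and many combinations would be illegal or irrelevant, so deciding which of the gaps matter remains an engineering judgement rather than a counting exercise.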

System Complexity Is Growing

Modern programmes have not simply grown in scale; they have shifted where complexity resides. Individual components are often well understood, and teams verify them competently in isolation. The dominant sources of uncertainty instead emerge from interaction across domains: functional behaviour coupled with power intent, security state, software control, and operating mode. These interactions evolve across transitions and under conditions that teams rarely exercise together during normal verification closure.

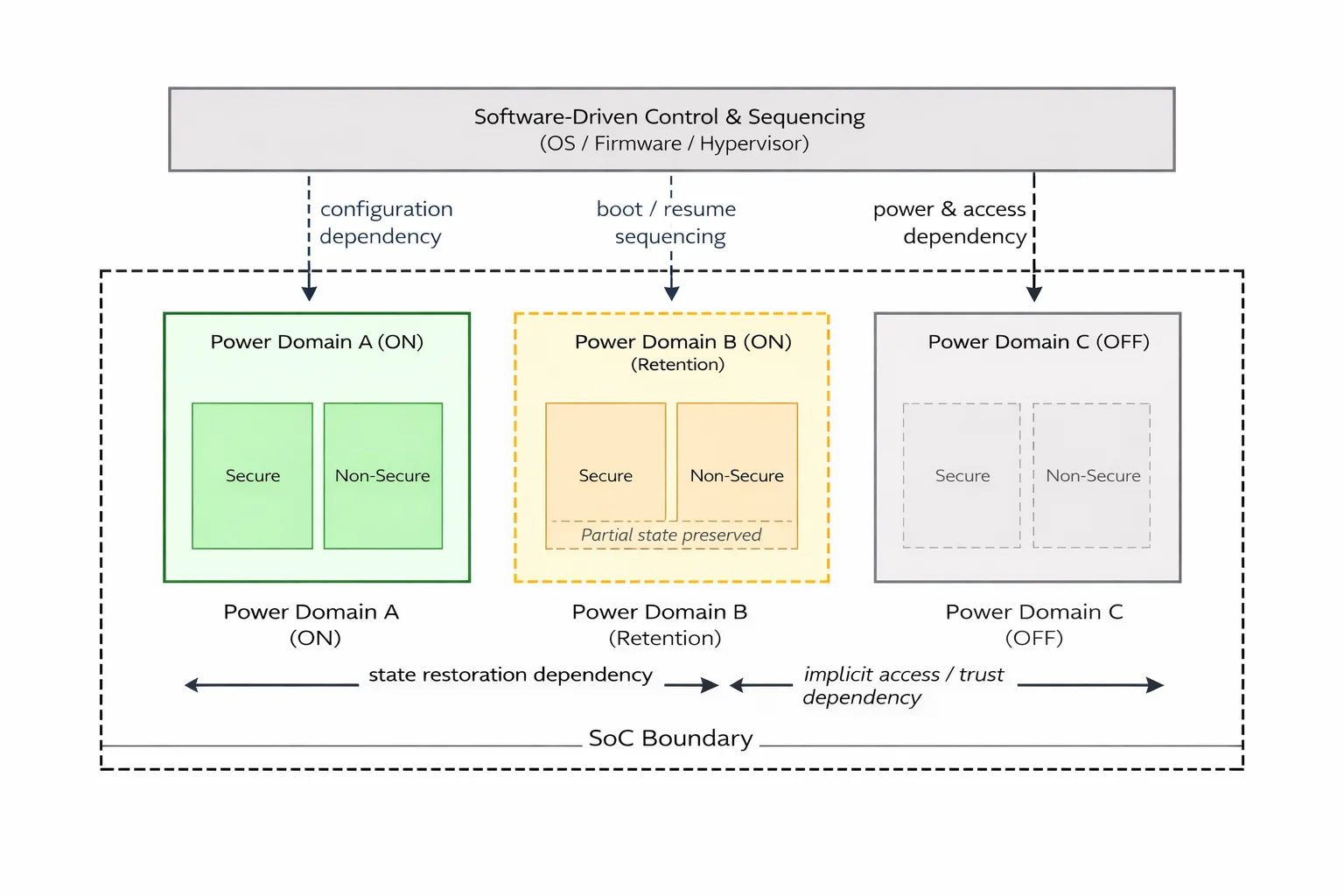

Interconnect coherency, shared resources, power sequencing, privilege boundaries, and software-defined configuration introduce behaviours that teams cannot reason about locally. Power state changes alter functional availability, security modes constrain access paths, and software orchestration binds these concerns together at runtime. While each subsystem may appear robust within its own verification scope, overall system behaviour depends on assumptions that span multiple teams, suppliers, and ownership boundaries. Risk therefore accumulates at these boundaries rather than within individual blocks.

At the system scale, this risk arises primarily from interaction across shared infrastructure, not from the behaviour of individual components verified in isolation. Figure 1 illustrates how multiple functional agents interact through shared interconnect and memory resources. While each processor or accelerator may behave correctly within its own verification scope, shared infrastructure introduces contention, ordering, and dependency effects that emerge only through interaction and that drive system-level risk.

Figure 1: System-level interaction across shared interconnect and memory resources in a heterogeneous SoC. Source: ResearchGate

Why Tools and Methodologies Alone Cannot Control Programme Risk

At the programme scale, risk depends less on how much verification teams perform and more on how leaders interpret and act on the resulting evidence. Tools generate results within the scope of the assumptions they are configured to validate. When those assumptions remain incomplete, outdated, or misaligned with system behaviour, verification progress can appear reassuring while exposure persists. The limitation lies not in tool capability, but in decision confidence: the ability to recognise which behaviours remain unexercised, which interactions cannot be observed directly, and where available evidence cannot support irreversible programme commitments.

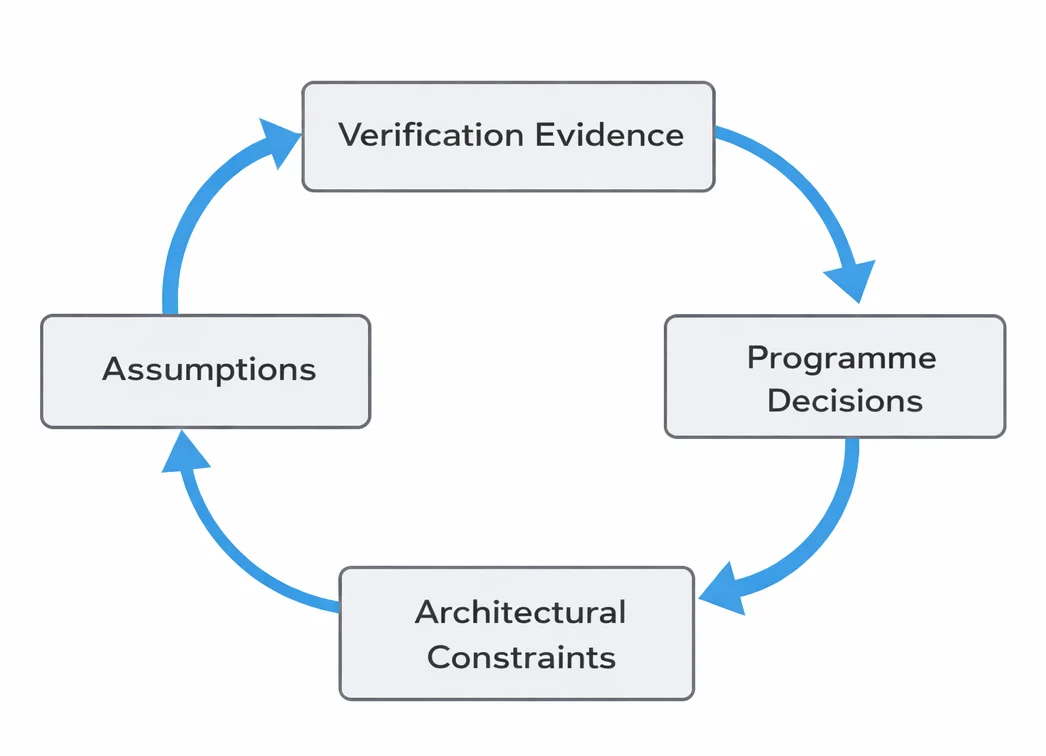

Programmes, therefore, build confidence through repeated interpretation and reassessment of verification evidence, rather than through linear progression towards sign-off. Evidence informs decisions, decisions reshape assumptions, and assumptions determine what evidence teams seek next. Confidence emerges through this iterative cycle, not through procedural completion.

Figure 2 illustrates this iterative relationship between verification evidence, underlying assumptions, and programme-level decisions. Rather than converging on confidence through activity closure alone, programmes develop confidence by repeatedly reassessing what evidence means in the context of evolving architectural understanding and delivery pressure.

While Figure 2 explains how confidence develops over time, it does not explain why some evidence carries more weight than others at sign-off. That distinction arises from architectural context. At the system scale, not all behaviours contribute equally to decision confidence. Power state, security mode, software control, and domain sequencing determine which behaviours can be exercised together and which verification results remain valid as operating conditions change.

Figure 3 shows how architectural dependencies shape the limits of observable behaviour and, therefore, the strength of sign-off confidence. Power domains, security boundaries, and software-controlled state transitions constrain what can be verified concurrently and what must be inferred. These dependencies determine where confidence can be demonstrated directly and where it relies on assumptions that span multiple domains and ownership boundaries.

Structural Pressures Inside Delivery Organisations

As programmes mature, structural pressures increasingly shape technical decision-making. Schedule commitments harden, architectural flexibility reduces, and responsibility becomes distributed across multiple teams and suppliers. Each group optimises within its own scope, often with limited visibility of system-level consequences. Engineering teams generate verification evidence locally, while programme leadership establishes confidence at the system level.

Under these conditions, challenges to assumptions become progressively harder to raise. Programmes frequently reframe late-emerging concerns as residual risk rather than pursue architectural change. This is not a failure of engineering discipline, but a consequence of organisational structure interacting with technical complexity. Risk accumulates not through neglect, but because rising delivery pressure increases the cost of challenging foundational decisions.

Where Independent Perspective Changes Outcomes

Independent verification changes outcomes through disciplined judgment rather than altered execution. Independence enables unbiased examination of assumptions and system-level evaluation of evidence beyond local completion criteria. This perspective is particularly valuable where risk spans multiple domains or organisational boundaries.

By focusing on interactions, constraints, and decision points rather than artefacts and milestones, independent analysis helps expose where confidence is being inferred rather than demonstrated. The value lies in reframing questions: what must behave correctly together, under which conditions, and with what margin. In complex programmes, this shift in judgement often matters more than additional execution effort.

Verification as a Risk Lens, Not a Completion Activity

At the system scale, verification delivers the greatest value when programmes use it as a continuous risk-assessment lens, rather than as an activity that progresses towards closure. Coverage metrics, pass rates, and regression stability indicate progress, but, on their own, they do not describe exposure. The critical question is not whether verification is complete, but whether it illuminates the behaviours that constrain safe decision-making.

Viewing verification as a risk lens encourages earlier interrogation of assumptions, more precise articulation of evidence gaps, and more deliberate consideration of trade-offs. It supports informed decisions under uncertainty, rather than retrospective justification once options have narrowed. This reframing aligns the verification effort with programme intent rather than procedural completion.
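One way to picture the difference between progress and exposure is a simple register of assumptions that records whether each one is backed by direct evidence. The following is a minimal sketch under stated assumptions: the `Assumption` structure, the evidence labels, and the register entries are all hypothetical, invented here for illustration rather than drawn from any established methodology or tool.

```python
from dataclasses import dataclass


@dataclass
class Assumption:
    statement: str
    evidence: str      # "demonstrated", "inferred", or "none"
    reversible: bool   # can the dependent decision still be changed cheaply?


def exposure(assumptions):
    """Return assumptions lacking direct evidence, irreversible ones first."""
    open_items = [a for a in assumptions if a.evidence != "demonstrated"]
    # False sorts before True, so irreversible commitments lead the list.
    return sorted(open_items, key=lambda a: a.reversible)


# Hypothetical register entries, invented for illustration.
REGISTER = [
    Assumption("Cache stays coherent across power-down of one cluster", "inferred", False),
    Assumption("Debug port is locked in secure mode", "demonstrated", False),
    Assumption("Firmware never reprograms the interconnect at runtime", "none", True),
]

for item in exposure(REGISTER):
    kind = "reversible" if item.reversible else "irreversible"
    print(item.evidence, "|", kind, "|", item.statement)
```

The list that results is not a completion metric. It is a prompt for discussion: which inferred assumptions sit underneath commitments that can no longer be revisited cheaply, and what evidence would be needed to demonstrate them.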

Closing Perspective: Risk Control Is a System-Level Discipline

The accumulation of risk in large programmes is not a consequence of weak verification, immature tools, or insufficient effort. It reflects the realities of system-scale complexity, organisational structure, and human decision-making under pressure. Risk emerges where interactions are least visible, assumptions are most implicit, and consequences are most costly to revisit.

Controlling this risk requires treating verification as part of a broader system-level discipline, one that supports judgment as much as execution. Engineers must interpret evidence in context, challenge assumptions deliberately, and apply an explicit understanding of uncertainty to decision-making so that programmes can manage risk before it becomes irreversible. At system scale, programmes build confidence through sustained insight rather than through the closure of activities.

Independent Verification in System-Level Risk Control

Independent verification plays a critical role when programme risk emerges from architectural interactions, organisational boundaries, and decision constraints rather than isolated implementation defects. At system scale, confidence depends on understanding available evidence, recognising unobservable behaviour, and identifying where assumptions influence sign-off decisions.

Alpinum Consulting focuses on verification as a judgment and risk-visibility discipline, helping leadership teams assess system-level exposure, challenge embedded assumptions, and make informed decisions under uncertainty across complex SoC programmes.

Explore Alpinum’s approach to independent design verification:

https://alpinumconsulting.com/services/pre-silicon/verification/

Written by: Mike Bartley

Mike started in software testing in 1988 after completing a PhD in Math, moving to semiconductor Design Verification (DV) in 1994, verifying designs (on Silicon and FPGA) going into commercial and safety-related sectors such as mobile phones, automotive, comms, cloud/data servers, and Artificial Intelligence. Mike built and managed state-of-the-art DV teams inside several companies, specialising in CPU verification.

Mike founded and grew a DV services company to 450+ engineers globally, successfully delivering services and solutions to more than 50 clients.

Mike started Alpinum in April 2025 to deliver a range of state-of-the-art industry solutions:

Alpinum AI provides tools and automations using Artificial Intelligence to help companies reduce development costs (by up to 90%).

Alpinum Services provides RTL to GDS VLSI services from nearshore and offshore centres in Vietnam, India, Egypt, Eastern Europe, Mexico and Costa Rica.

Alpinum Consulting also provides strategic board-level consultancy services, helping companies to grow.

The Alpinum training department provides self-paced, fully online training in SystemVerilog, UVM Introduction and Advanced, Formal Verification, DV methodologies for SV, UVM, VHDL and OSVVM, and CPU/RISC-V.

Alpinum Events organises a number of free-to-attend industry events.

You can contact Mike (mike@alpinumconsulting.com or +44 7796 307958) or book a meeting with Mike using Calendly (https://calendly.com/mike-alpinumconsulting).
