Introduction

Chiplet-based architectures are rapidly becoming a dominant approach for scaling performance, flexibility, and cost efficiency in advanced silicon systems. By decomposing large monolithic designs into smaller, reusable dies, engineers can mix process nodes, integrate heterogeneous IP, and accelerate innovation across compute, memory, and specialised accelerators.

As chiplet adoption matures, however, a critical challenge is becoming increasingly visible. Verification complexity no longer resides primarily at the IP or die level. Instead, it emerges at the system level, where independently verified components interact in ways that are difficult to predict, observe, and validate using traditional approaches.

This shift has significant implications for how verification is planned, executed, and signed off. In chiplet-based systems, correct IP does not automatically result in correct silicon. Understanding why requires a closer examination of how integration complexity manifests in modern multi-die designs.

From IP Verification to System Behaviour

For decades, verification practice has been organised around a clear hierarchy. Individual IP blocks are validated in isolation and then integrated at the SoC level, where additional checks focus on connectivity, coherence, and top-level functionality. Once expected behaviours are demonstrated and regressions stabilise, designs progress toward tape-out.

Chiplet-based systems challenge this model. Each die may be developed by a different team, sourced from a different vendor, or optimised for a different process technology. While each component can be functionally correct within its own verification environment, system behaviour emerges only when these components interact.

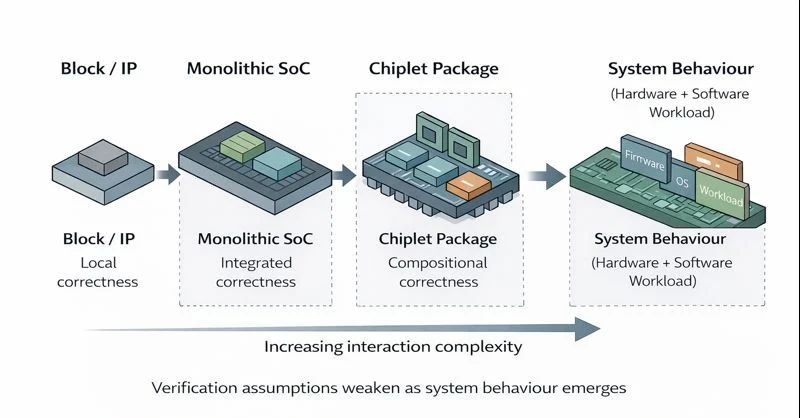

Figure 1: Evolution of verification scope from block-level validation to system-level behaviour as designs transition from monolithic SoCs to chiplet-based systems.

As the verification scope expands from individual blocks to composed systems, assumptions that hold at the IP and SoC levels increasingly break down under system-level interaction.

Timing relationships, protocol dependencies, power delivery effects, and software-driven workloads are often invisible during IP-level verification. These behaviours surface only when chiplets are composed into a complete system, frequently late in the development cycle. As a result, verification success cannot be defined solely by local correctness; it must also account for global interaction.

Emergent Failure Modes in Multi-Die Systems

One of the defining characteristics of system-level verification is the appearance of emergent failure modes. These failures do not originate from a single faulty block but arise from interactions across dies, interfaces, and operating conditions.

Common examples include:

- Cross-chiplet timing violations triggered by shared clocking or asynchronous boundaries

- Power and thermal coupling effects that alter behaviour under system workloads

- Protocol mismatches that remain dormant until specific traffic patterns occur

- Firmware–hardware interactions that expose corner cases unseen in simulation

Figure 2: Example illustrating how a localised defect or failure within one chiplet can propagate across die-to-die interfaces and become observable only at the system boundary. Source: KEYSIGHT

In multi-die systems, failures that appear contained or benign at the component level may surface only once system-level interactions and workloads are exercised.

In many cases, these issues only appear under realistic workloads or long-running system scenarios. Traditional verification environments, optimised for block-level exhaustiveness, are poorly suited to capturing this class of behaviour.
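
To make the idea of a dormant, traffic-dependent failure concrete, the following toy model may help. It is a sketch only: the FIFO depths, the drain rate, and the traffic shapes are hypothetical values chosen for illustration, and the model does not represent any real die-to-die protocol. It assumes a sender that was verified against a receiver buffer of eight entries, paired with a receiver that actually provides four.

```python
def simulate(arrivals, rx_depth, drain_per_cycle=1):
    """Return the number of flits dropped by a receiver FIFO.

    arrivals: flits sent by the transmitting die in each cycle.
    rx_depth: receiver FIFO depth (hypothetical value).
    """
    occupancy = 0
    dropped = 0
    for sent in arrivals:
        # The receiver drains first, then accepts new flits up to its depth.
        occupancy = max(0, occupancy - drain_per_cycle)
        accepted = min(sent, rx_depth - occupancy)
        dropped += sent - accepted
        occupancy += accepted
    return dropped


# Assumption: the sender was verified against a depth of 8; the receiver has 4.
RX_DEPTH = 4
paced = [1] * 32                         # 32 flits, one per cycle
bursty = [8, 0, 0, 0, 0, 0, 0, 0] * 4    # the same 32 flits, in bursts of 8

paced_drops = simulate(paced, RX_DEPTH)
bursty_drops = simulate(bursty, RX_DEPTH)
print(f"paced traffic drops:  {paced_drops}")
print(f"bursty traffic drops: {bursty_drops}")
```

Both traffic shapes carry the same number of flits at the same average rate, yet only the bursty pattern exposes the mismatch. A regression that happens to use paced traffic would pass indefinitely.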

Limits of Block and Die-Level Closure

Coverage metrics, assertions, and constrained-random testing remain essential instruments for local validation. However, in chiplet-based systems, coverage closure is increasingly decoupled from confidence in overall system behaviour.

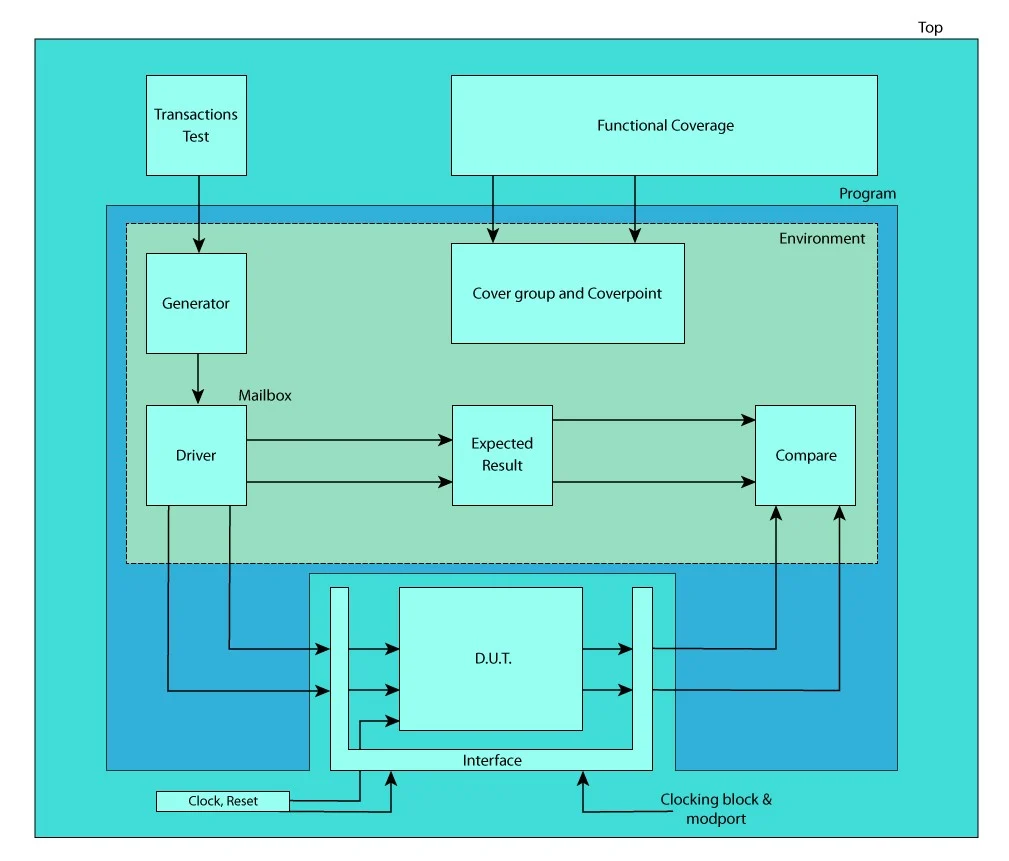

Figure 3: Conceptual illustration showing how functional coverage and scoreboard-based verification environments can reach closure without guaranteeing correct behaviour at the system level. Source: ALDEC

While coverage metrics indicate which scenarios have been exercised, they provide limited insight into whether complex system-level interactions behave correctly under realistic workloads.
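
One way this decoupling can arise is sketched below. The state names and regression samples are hypothetical, and the example assumes a simplified coverage model in which each die tracks its own state bins. Each die reaches full coverage on its own bins while nearly half of the cross-die combinations are never exercised.

```python
from itertools import product

# Hypothetical coverage bins for two dies.
DIE_A_STATES = ["idle", "active", "low_power"]
DIE_B_STATES = ["idle", "active", "retrain"]

# Hypothetical regression samples: (die A state, die B state) seen together.
samples = [
    ("idle", "idle"),
    ("active", "active"),
    ("low_power", "idle"),
    ("idle", "retrain"),
    ("active", "idle"),
]


def coverage(hit, bins):
    """Fraction of bins that were hit at least once."""
    return len(set(hit) & set(bins)) / len(bins)


cov_a = coverage([a for a, _ in samples], DIE_A_STATES)
cov_b = coverage([b for _, b in samples], DIE_B_STATES)

cross_bins = list(product(DIE_A_STATES, DIE_B_STATES))
cov_cross = coverage(samples, cross_bins)
missing = sorted(set(cross_bins) - set(samples))

print(f"die A: {cov_a:.0%}, die B: {cov_b:.0%}, cross: {cov_cross:.0%}")
print("unexercised combinations:", missing)
```

Even a fully closed cross-coverage model would only show that the combinations were exercised, not that the system behaved correctly in each of them.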

Block-level validation typically assumes that correctness is compositional: if each part behaves correctly in isolation, the integrated system will behave correctly as a whole. In practice, this assumption breaks down when:

- Components make incompatible assumptions about ordering, latency, or error handling

- Die-level environments abstract away shared power, timing, and thermal constraints

- Validation scenarios fail to reflect realistic software-driven workloads

The result is a widening gap between verification effort and verification effectiveness. Systems may demonstrate strong local correctness while still harbouring latent integration defects that emerge only during system bring-up or extended operation.
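
A small arithmetic sketch shows how incompatible latency assumptions can break compositionality. All stage names and cycle counts are hypothetical. Each stage meets the limit it was verified against locally, but the composed path exceeds a timeout that the requester assumes.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    spec_max_cycles: int      # limit the stage was verified against locally
    measured_max_cycles: int  # worst case seen in its own regression


# All figures below are hypothetical.
path = [
    Stage("requester die egress", 40, 38),
    Stage("die-to-die link", 30, 29),
    Stage("target die ingress and response", 40, 39),
]
REQUESTER_TIMEOUT_CYCLES = 100  # assumption baked into the requester

locally_ok = all(s.measured_max_cycles <= s.spec_max_cycles for s in path)
end_to_end = sum(s.measured_max_cycles for s in path)
system_ok = end_to_end <= REQUESTER_TIMEOUT_CYCLES

print(f"every stage within its local spec: {locally_ok}")
print(f"end-to-end worst case: {end_to_end} cycles "
      f"(timeout {REQUESTER_TIMEOUT_CYCLES})")
print(f"system-level budget met: {system_ok}")
```

No single stage is at fault here. The defect lies in a budget that nobody owned, which is why it tends to be found at bring-up and not in any die-level regression.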

System-Level Verification Techniques

Addressing these challenges requires approaches that operate at the system level rather than treating integration as a final validation step. Key techniques include:

- Cross-die protocol verification, focusing on end-to-end behaviour rather than interface compliance alone

- Power- and thermal-aware analysis that captures dynamic effects across operating conditions

- Workload-driven validation using realistic software and traffic patterns

- Hierarchical observability that maintains visibility across dies without intrusive instrumentation

These techniques do not replace established practices. Instead, they extend visibility into system interactions that would otherwise remain opaque.
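
As an illustration of end-to-end checking, as opposed to per-interface compliance, the sketch below compares what a source die sent with what a destination die observed. The function name, the transaction format, and the sample data are assumptions made for this example. A production scoreboard would also need to handle ordering rules, timeouts, and unexpected transaction IDs.

```python
from collections import Counter


def end_to_end_check(sent, received):
    """Compare transactions at the source die with those at the destination.

    sent / received: lists of (txn_id, payload) tuples.
    Returns lost, duplicated, and corrupted transaction IDs.
    """
    expected = dict(sent)
    seen = Counter(txn_id for txn_id, _ in received)
    return {
        "lost": sorted(set(expected) - set(seen)),
        "duplicated": sorted(t for t, n in seen.items() if n > 1),
        "corrupted": sorted(
            {t for t, p in received if t in expected and expected[t] != p}
        ),
    }


# Hypothetical traffic: every received packet is individually well formed.
sent = [(1, 0xA0), (2, 0xB1), (3, 0xC2), (4, 0xD3)]
received = [(1, 0xA0), (3, 0xC2), (3, 0xC2), (4, 0xD7)]

report = end_to_end_check(sent, received)
print(report)
```

Each received packet in this example would pass a format check at the interface. Only the comparison across the whole path reveals the lost, duplicated, and corrupted transactions.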

Standards Enabling System Visibility

Industry standards play a critical role in enabling system-level verification. Without common access and observability mechanisms, integration complexity quickly becomes unmanageable.

- IEEE 1838 provides structured test access architectures for stacked and multi-die systems, enabling predictable entry points for validation and debug.

- UCIe establishes interoperable die-to-die communication, including support for metadata exchange that aids validation and monitoring.

- Open Compute Project (OCP) initiatives define open frameworks for system-in-package testing and cross-vendor interoperability.

Together, these standards create a foundation for system-level verification that scales across suppliers and integration models.

Where AI Supports Verification

Artificial intelligence is increasingly applied to verification workflows, particularly at the system level, where data volumes exceed the capacity of manual analysis.

Effective applications include:

- Clustering and classification of failure signatures across large regression sets

- Identification of coverage gaps correlated with system workloads

- Cross-domain correlation between RTL, firmware, and emulation results

AI does not replace engineering judgment. Its value lies in augmenting visibility and prioritisation, not in making verification decisions autonomously. Explainability and traceability remain essential, especially in safety- and reliability-critical designs.
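
The grouping of failure signatures can be sketched with a simple, explainable first step. Note that the example below is deterministic rule-based normalisation, not machine learning. It is shown as the kind of preprocessing that might precede a learned clustering stage, and the log messages and test names are invented for illustration.

```python
import re
from collections import defaultdict


def signature(message):
    """Normalise a failure message so that runs differing only in
    addresses, data values, or timestamps share one signature."""
    sig = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", message)
    sig = re.sub(r"\b\d+\b", "<N>", sig)
    return sig


def cluster(failures):
    """Group test names by the normalised signature of their failure."""
    groups = defaultdict(list)
    for test_name, message in failures:
        groups[signature(message)].append(test_name)
    return dict(groups)


# Hypothetical regression failures.
failures = [
    ("test_017", "D2D link timeout at 10452 ns on lane 3"),
    ("test_101", "D2D link timeout at 99871 ns on lane 0"),
    ("test_042", "Scoreboard mismatch addr 0x1F00 exp 0xAA got 0x55"),
    ("test_230", "Scoreboard mismatch addr 0x8004 exp 0x01 got 0x00"),
    ("test_311", "Coherency probe lost for agent 2"),
]

clusters = cluster(failures)
for sig, tests in clusters.items():
    print(f"{len(tests)} failure(s): {sig} -> {tests}")
```

Because every cluster maps back to a readable signature and a list of tests, an engineer can trace why failures were grouped together, which is in keeping with the explainability requirement described above.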

Architectural Implications for Engineers

System-level verification cannot be retrofitted late in the design cycle. It must be considered during architecture definition, where decisions about partitioning, interfaces, and observability have long-term consequences.

Teams that plan for system-level verification early benefit from reduced bring-up time, faster root-cause identification, and greater confidence in integration sign-off. Conversely, architectures that prioritise performance or reuse without considering verification often incur hidden costs during integration and post-silicon debug.

Closing Perspective

Chiplet-based systems represent a fundamental shift in how silicon is designed and integrated. As architectures evolve, verification must evolve alongside them. The centre of gravity is moving away from isolated component correctness toward system-level behaviour and interaction.

For engineers, this shift demands renewed attention to observability, standards adoption, and verification strategy. In the chiplet era, understanding where integration complexity truly emerges is essential to delivering reliable, scalable silicon systems.

For organisations addressing system-level verification challenges in complex, multi-die programmes, Alpinum Consulting provides independent technical insight and risk-focused engineering support.

https://alpinumconsulting.com/contact-us/

References

- IEEE Standards Association, IEEE 1838™ – Standard for Test Access Architecture for Three-Dimensional Stacked Integrated Circuits, IEEE, 2021.

https://semiengineering.com/knowledge_centers/standards-laws/standards/ieee-1838/

- Universal Chiplet Interconnect Express (UCIe) Consortium, UCIe Specification Overview, 2023.

https://www.uciexpress.org

- Open Compute Project (OCP), Universal Die-to-Die (D2D) Link Layer Documentation, Open Compute Project Foundation.

https://www.opencompute.org

- EE Times, A Holistic Approach to System-Level Design and Verification Success, 2006.

https://www.eetimes.com

- SemiEngineering, A Balanced Approach to Verification, 2024.

https://semiengineering.com

Written by: Mike Bartley

Mike started in software testing in 1988 after completing a PhD in Math, moving to semiconductor Design Verification (DV) in 1994, verifying designs (on Silicon and FPGA) going into commercial and safety-related sectors such as mobile phones, automotive, comms, cloud/data servers, and Artificial Intelligence. Mike built and managed state-of-the-art DV teams inside several companies, specialising in CPU verification.

Mike founded and grew a DV services company to 450+ engineers globally, successfully delivering services and solutions to more than 50 clients.

Mike started Alpinum in April 2025 to deliver a range of state-of-the-art industry solutions:

- Alpinum AI provides tools and automations using Artificial Intelligence to help companies reduce development costs (by up to 90%).

- Alpinum Services provides RTL to GDS VLSI services from nearshore and offshore centres in Vietnam, India, Egypt, Eastern Europe, Mexico and Costa Rica.

- Alpinum Consulting provides strategic board-level consultancy services, helping companies to grow.

- The Alpinum training department provides self-paced, fully online training in SystemVerilog, UVM Introduction and Advanced, Formal Verification, DV methodologies for SV, UVM, VHDL and OSVVM, and CPU/RISC-V.

- Alpinum Events organises a number of free-to-attend industry events.

You can contact Mike (mike@alpinumconsulting.com or +44 7796 307958) or book a meeting with Mike using Calendly (https://calendly.com/mike-alpinumconsulting).
