Introduction: Separating promise from deployable capability

Quantum computing occupies a prominent place in discussions of industrial technology, yet its practical implications are often misunderstood. Public narratives frequently conflate algorithmic demonstrations, laboratory benchmarks, and vendor roadmaps with deployable industrial capability. For most industrial organisations, these distinctions matter more than raw theoretical potential.

Many quantum demonstrations show that specific problems can be formulated and solved under controlled conditions. Fewer demonstrate that those solutions can be reproduced reliably, integrated into existing workflows, or maintained over operational lifetimes. The gap between experimental success and industrial usability remains substantial.

From an engineering perspective, quantum computing should be framed as a long-horizon capability with selective near-term use. Its relevance today lies less in replacing classical computing and more in understanding where limited advantage may emerge, how that advantage is accessed, and what constraints govern its integration into real systems. A separate section at the end lists the conferences and forums referenced in this article, together with dates and locations for context.

What industrial users actually require

Industrial computing environments prioritise reliability, repeatability, and integration over peak performance. Outputs must be reproducible across runs, explainable within system context, and traceable through engineering workflows. These requirements differ sharply from those in research settings, where probabilistic outcomes and exploratory results are acceptable.

Quantum systems challenge these expectations. Many quantum algorithms produce probabilistic outputs, so any advantage they offer is statistical rather than deterministic. While statistical improvements may be valuable in some contexts, they complicate validation, acceptance testing, and certification, particularly in regulated or safety-critical industries.
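
One way to make run-to-run reproducibility testable is to compare the measured output distributions of two runs rather than individual results. The sketch below is illustrative only: the function names, the bitstring-count format, and the 0.05 tolerance are assumptions, not part of any vendor toolchain or standard, and a real acceptance criterion would also need to account for shot noise at the chosen sample size.

```python
from typing import Dict


def total_variation_distance(counts_a: Dict[str, int], counts_b: Dict[str, int]) -> float:
    """Return the total variation distance between two measured histograms.

    Each argument maps an outcome label (for example a bitstring) to the
    number of shots that produced it. The result lies between 0.0
    (identical distributions) and 1.0 (no outcomes in common).
    """
    total_a = sum(counts_a.values())
    total_b = sum(counts_b.values())
    if total_a == 0 or total_b == 0:
        raise ValueError("each run needs at least one shot")
    outcomes = set(counts_a) | set(counts_b)
    return 0.5 * sum(
        abs(counts_a.get(o, 0) / total_a - counts_b.get(o, 0) / total_b)
        for o in outcomes
    )


def runs_are_consistent(
    counts_a: Dict[str, int], counts_b: Dict[str, int], tolerance: float = 0.05
) -> bool:
    """Hypothetical acceptance check: two runs agree if their distance is small.

    The default tolerance is an arbitrary placeholder, not a recommended value.
    """
    return total_variation_distance(counts_a, counts_b) <= tolerance
```

A check of this kind gives acceptance testing a numerical criterion to record and audit, although choosing a defensible tolerance remains an engineering judgement for each application.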

Cost and operational constraints further shape adoption. Access to quantum hardware is limited and often mediated through cloud platforms, and the hardware itself is sensitive to environmental conditions. Latency, scheduling, and availability all affect whether quantum resources can be meaningfully incorporated into industrial processes. These factors define the boundary between experimental exploration and deployable use.

Near-term industrial application classes

Optimisation and scheduling problems

Optimisation is frequently cited as a near-term quantum application, particularly for logistics, scheduling, and resource allocation. In practice, most industrial benefits today come from quantum-inspired algorithms and hybrid approaches that predominantly run on classical hardware.

Noise, limited qubit counts, and error rates constrain the scale of problems that can be addressed directly on quantum devices. As a result, quantum components often serve as exploratory tools rather than production engines. Classical heuristics and approximation methods continue to dominate operational deployment.

The industrial value lies in understanding problem structure, benchmarking alternative approaches, and assessing whether future quantum scaling could justify deeper integration. Treating quantum optimisation as a drop-in replacement for classical solvers is rarely justified.
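
Benchmarking of this kind can start with a very small harness. The sketch below assumes the problem has been written as a QUBO (quadratic unconstrained binary optimisation), a common formulation for quantum and quantum-inspired solvers. It compares an exhaustive search, which gives a ground truth on small instances, against a simple classical local search. The function names and the four-variable instance are hypothetical, and the local search stands in for whichever candidate solver is being assessed.

```python
import itertools
import random
from typing import Dict, Sequence, Tuple

Qubo = Dict[Tuple[int, int], float]


def energy(q: Qubo, x: Sequence[int]) -> float:
    """Evaluate the QUBO objective: sum of w * x[i] * x[j] over all entries."""
    return sum(w * x[i] * x[j] for (i, j), w in q.items())


def brute_force(q: Qubo, n: int) -> Tuple[float, Tuple[int, ...]]:
    """Exact minimum by enumeration; only feasible for small n."""
    best = min(itertools.product((0, 1), repeat=n), key=lambda x: energy(q, x))
    return energy(q, best), best


def local_search(q: Qubo, n: int, restarts: int, rng: random.Random) -> Tuple[float, Tuple[int, ...]]:
    """Single-bit-flip descent from random starting points (a classical baseline)."""
    best_e, best_x = float("inf"), tuple([0] * n)
    for _ in range(restarts):
        x = [rng.randint(0, 1) for _ in range(n)]
        e = energy(q, x)
        improved = True
        while improved:
            improved = False
            for i in range(n):
                x[i] ^= 1                  # try flipping bit i
                e_new = energy(q, x)
                if e_new < e:
                    e, improved = e_new, True
                else:
                    x[i] ^= 1              # undo the flip
        if e < best_e:
            best_e, best_x = e, tuple(x)
    return best_e, best_x


# Hypothetical toy instance: reward selecting items, penalise adjacent pairs.
EXAMPLE_QUBO: Qubo = {
    (0, 0): -1, (1, 1): -1, (2, 2): -1, (3, 3): -1,
    (0, 1): 2, (1, 2): 2, (2, 3): 2,
}

if __name__ == "__main__":
    exact_e, _ = brute_force(EXAMPLE_QUBO, 4)
    heuristic_e, _ = local_search(EXAMPLE_QUBO, 4, restarts=5, rng=random.Random(0))
    print("exact:", exact_e, "heuristic:", heuristic_e, "gap:", heuristic_e - exact_e)
```

Recording the gap to a known optimum, alongside runtime and cost, gives a like-for-like basis for judging any quantum or quantum-inspired alternative against the classical solver already in use.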

Materials, chemistry, and simulation

Quantum computing shows promise in materials science and chemistry, where quantum behaviour is intrinsic to the problems being studied. Early value is generated through problem exploration, hypothesis testing, and model validation rather than routine production simulation.

Current workflows rely heavily on classical pre-processing to frame problems and post-processing to interpret results. Quantum computation occupies a narrow segment of a much larger pipeline. The sensitivity of results to noise and model assumptions further limits standalone use.

For industrial users, quantum simulation today is best viewed as an adjunct to established modelling techniques, offering insight rather than replacement. The engineering challenge lies in integrating these insights into existing design and verification processes.

Cryptography and security (longer horizon)

Quantum computing’s impact on cryptography is widely discussed, but its industrial implications are longer-term. The primary concern is not immediate deployment of quantum cryptography, but preparation for a transition to quantum-resilient security.

The industrial focus is therefore on migration planning, algorithm agility, and lifecycle management rather than on replacing existing systems. The emphasis is on ensuring that infrastructure deployed today can adapt as cryptographic standards evolve.

This preparation work is architectural rather than computational. It concerns system design choices, update mechanisms, and long-term risk management more than near-term quantum advantage.
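
Algorithm agility can be illustrated with a small design sketch: each protected message carries an algorithm identifier, and verification consults a registry and an allow-list rather than a hard-coded primitive. Everything named here (`Envelope`, `REGISTRY`, the algorithm labels) is hypothetical, and the HMAC constructions are stand-ins chosen only because they ship with the Python standard library. They are not quantum-resilient signature schemes.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Callable, Dict, Set

# A tagging function takes (key, message) and returns an authentication tag.
Tagger = Callable[[bytes, bytes], bytes]


@dataclass(frozen=True)
class Envelope:
    alg: str        # algorithm identifier travels with the data
    payload: bytes
    tag: bytes


# Registry of supported algorithms; a migration adds entries here.
REGISTRY: Dict[str, Tagger] = {
    "legacy-hmac-sha256": lambda key, msg: hmac.new(key, msg, hashlib.sha256).digest(),
}


def register(alg: str, tagger: Tagger) -> None:
    """Make a new algorithm available without touching callers."""
    REGISTRY[alg] = tagger


def seal(alg: str, key: bytes, payload: bytes) -> Envelope:
    """Protect a payload with the named algorithm."""
    return Envelope(alg, payload, REGISTRY[alg](key, payload))


def verify(env: Envelope, key: bytes, allowed: Set[str]) -> bool:
    """Accept only algorithms that policy currently allows."""
    if env.alg not in allowed or env.alg not in REGISTRY:
        return False
    expected = REGISTRY[env.alg](key, env.payload)
    return hmac.compare_digest(expected, env.tag)


# Placeholder for a future scheme; SHA3-based HMAC is NOT post-quantum.
register("next-hmac-sha3-256", lambda key, msg: hmac.new(key, msg, hashlib.sha3_256).digest())
```

The design choice is that retiring an algorithm becomes a policy change to the allow-list, not a code change in every component that verifies data, which is the property that matters for equipment with long service lives.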

Hybrid quantum–classical systems

In practical industrial settings, quantum systems operate as accelerators within predominantly classical workflows. Computation management, control, data movement, and result interpretation remain classical responsibilities. Quantum resources are invoked selectively, and any benefit is constrained by scale, noise, and integration overheads.

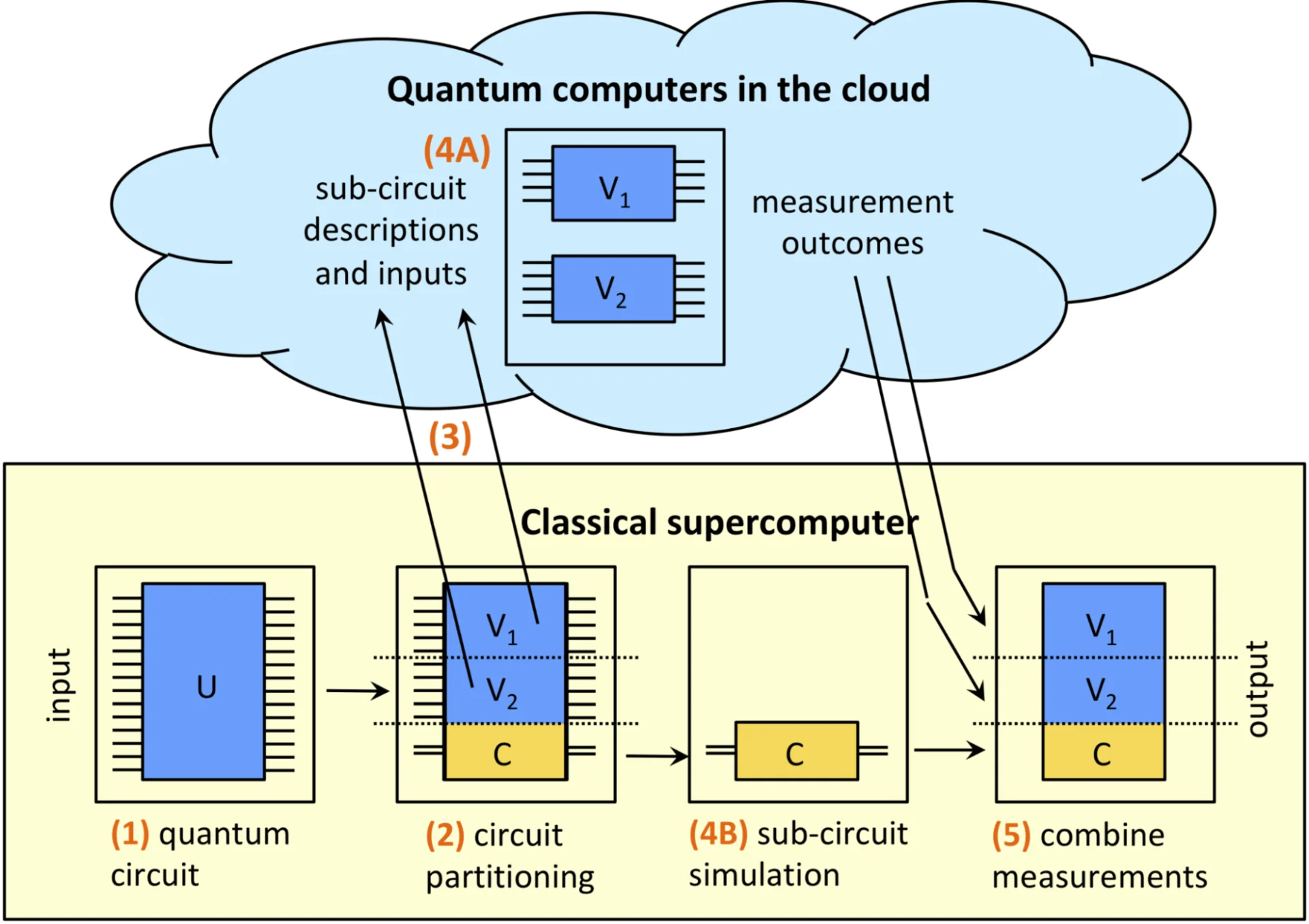

Figure 1: Hybrid quantum–classical execution model. Source: epiqc.cs.uchicago.edu

Conceptual view of a hybrid quantum–classical workflow in which classical systems manage circuit decomposition, orchestration, and result aggregation while quantum hardware executes constrained sub-circuits. The model reflects current industrial practice rather than a complete software or control stack.

Figure 1 illustrates the execution structure that dominates present-day industrial quantum use. Quantum processors do not operate as independent platforms, but as tightly controlled execution resources embedded within classical systems. Decomposition, scheduling, and interpretation occur outside the quantum device, reflecting practical limits on reliability, verification, and operational control.
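
The division of labour can be sketched in a few lines. In the example below, a classical loop owns the parameters, the iteration schedule, and the interpretation of results, while the quantum step is reduced to a single function call. That call is mocked: `run_on_quantum_backend` is a hypothetical placeholder that simulates shot sampling for a single-qubit rotation RY(theta), not a real vendor API. The gradient uses the parameter-shift rule, which is exact for this one-parameter case.

```python
import math
import random
from typing import Tuple


def run_on_quantum_backend(theta: float, shots: int, rng: random.Random) -> float:
    """Mock QPU call: estimate <Z> after RY(theta) on |0> from sampled shots.

    The probability of measuring 1 is sin^2(theta / 2), so <Z> = cos(theta).
    A real deployment would submit a circuit to a cloud or on-premises device here.
    """
    p_one = math.sin(theta / 2.0) ** 2
    ones = sum(1 for _ in range(shots) if rng.random() < p_one)
    return 1.0 - 2.0 * ones / shots


def hybrid_minimise(
    theta: float,
    iterations: int = 30,
    learning_rate: float = 0.4,
    shots: int = 2000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Classical gradient descent that calls the quantum resource selectively."""
    rng = random.Random(seed)
    for _ in range(iterations):
        # Parameter-shift rule: two extra quantum evaluations per gradient.
        plus = run_on_quantum_backend(theta + math.pi / 2.0, shots, rng)
        minus = run_on_quantum_backend(theta - math.pi / 2.0, shots, rng)
        gradient = 0.5 * (plus - minus)
        theta -= learning_rate * gradient      # classical update step
    final_energy = run_on_quantum_backend(theta, shots, rng)
    return theta, final_energy


if __name__ == "__main__":
    theta, value = hybrid_minimise(0.5)
    print(f"theta = {theta:.3f}, estimated <Z> = {value:.3f}")
```

Even in this toy case, each optimisation step costs two quantum evaluations of 2,000 shots each, which illustrates why queueing, latency, and data movement around the quantum call tend to dominate the engineering effort.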

This model aligns naturally with existing high-performance computing and cloud infrastructures. Integration challenges are comparable to those seen with other specialised accelerators, including scheduling complexity, data transfer overheads, and software orchestration maturity.

Industry discussions increasingly frame quantum computing in this hybrid context, including at forums such as IEEE Quantum Week, where attention is placed on system integration, tooling, and deployment realism rather than standalone performance claims.

Engineering constraints holding back deployment

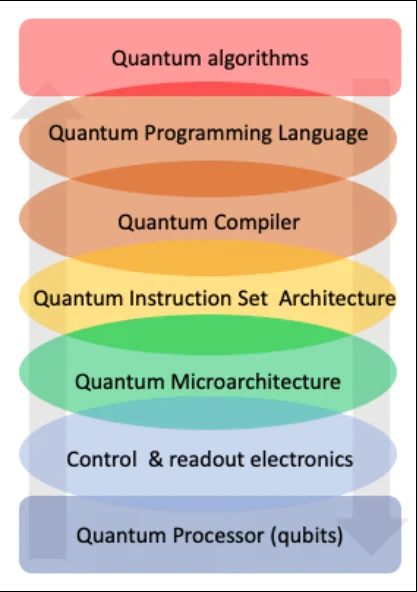

Several engineering constraints continue to limit industrial deployment. Figure 2 places these constraints in context by showing the layered quantum computing stack, from algorithms and programming abstractions down to control electronics and physical qubits. Limits on qubit stability, error-correction overheads, and system scaling place strict bounds on usable circuit depth and problem size. Improvements in hardware capability continue, but progress remains incremental rather than step-changing.

Figure 2: Quantum computing system stack and control layers. Source: 10.48550/arXiv.2204.06369.

Layered view of a quantum computing system, showing application software, compilation and scheduling, control electronics, and physical qubit hardware. The diagram highlights how capability, reliability, and verification challenges emerge across interfaces rather than solely from hardware limitations.

Toolchain limitations compound these constraints across the stack shown in Figure 2. Programming models, debugging support, and verification frameworks lack the maturity typically required in industrial engineering environments. Limited observability across software, control, and hardware layers makes fault diagnosis, performance analysis, and reproducibility difficult at the system level.

Operational complexity introduces additional friction. Quantum systems are sensitive to environmental conditions, rely on specialised control electronics and infrastructure, and require expertise that is not widely available within most industrial organisations. As Figure 2 suggests, these challenges propagate across multiple layers, increasing integration effort and elevating programme risk during deployment and operation.

Verification, confidence, and decision risk

Verifying quantum computation at the system level presents unique challenges. Probabilistic outputs complicate result validation, while limited observability makes root-cause analysis difficult. Confidence is often statistical rather than deterministic.
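
The statistical nature of that confidence can be made concrete. The sketch below uses Hoeffding's inequality to estimate how many repeated executions (shots) are needed before an observed success frequency lies within a chosen margin of the true probability. It assumes independent, identically distributed shots, which hardware drift may violate in practice, and the bound is conservative. The function name and example values are illustrative.

```python
import math


def shots_for_confidence(epsilon: float, delta: float) -> int:
    """Shots needed so that |observed frequency - true probability| <= epsilon
    holds with probability at least 1 - delta (two-sided Hoeffding bound).

    n >= ln(2 / delta) / (2 * epsilon^2)
    """
    if not 0.0 < epsilon < 1.0:
        raise ValueError("epsilon must be between 0 and 1")
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must be between 0 and 1")
    return math.ceil(math.log(2.0 / delta) / (2.0 * epsilon ** 2))


if __name__ == "__main__":
    for eps in (0.05, 0.01):
        print(f"margin {eps}: {shots_for_confidence(eps, 0.05)} shots at 95% confidence")
```

Because the required shot count grows with the inverse square of the margin, tightening the tolerance from 0.05 to 0.01 raises the requirement roughly 25-fold. This is one reason verification burden scales faster than intuition suggests.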

For regulated or safety-critical industries, these characteristics raise significant concerns. Trust, reproducibility, and auditability are foundational requirements that quantum systems currently struggle to meet without extensive supporting infrastructure.

As a result, quantum adoption is less a technical decision than a risk management exercise. Organisations must weigh potential advantage against verification burden and decision risk, particularly where system failure has serious consequences.

What industrial progress actually looks like

Industrial progress in quantum computing is incremental. It involves skill development, workflow experimentation, and architectural readiness rather than sudden disruption. Organisations that benefit most treat quantum capability as something to be managed and evaluated over time.

Industry-facing forums such as Q2B provide useful grounding by focusing on deployment realities, integration challenges, and commercial constraints. These discussions reinforce that progress depends on disciplined engineering rather than headline claims.

The emphasis is on learning where quantum fits, where it does not, and how systems can evolve without over-committing to immature capability.

Conclusion: Industrial quantum is a capability to manage, not a shortcut to advantage

Quantum computing has long-term potential, but its industrial value today lies in careful integration and effective risk management. Timelines remain uncertain, and capability is constrained by engineering realities rather than theoretical possibilities.

Value emerges when quantum systems are treated as one component within a broader architecture, supported by classical computation, robust workflows, and clear verification strategies. Disciplined integration matters more than early adoption.

For industrial organisations, quantum computing is best approached as a risk-managed engineering journey. Advantage comes not from claims of disruption, but from understanding constraints, preparing systems, and making informed decisions as capability evolves.

Related Industry Conferences and Forums

The following conferences and forums reflect where industrial quantum computing capability, constraints, and system-level integration are actively discussed in 2026.

- IEEE Quantum Week

13–18 September 2026, Toronto, Ontario, Canada

Technical forum focused on quantum hardware, software, and system-level integration, with attention to verification, hybrid workflows, and practical deployment constraints.

- Q2B

4–5 June 2026, Tokyo, Japan

Industry forum addressing commercial deployment, hybrid quantum–classical workflows, and adoption constraints.

- International Conference on Quantum Communications, Networking, and Computing (QCNC 2026)

6–8 April 2026, Kobe, Japan

Technical and engineering topics spanning quantum communication infrastructure, networking, and computing.

- Global Summit on Quantum Computing – Quantum Meet 2026

19–21 March 2026, Barcelona, Spain

Summit covering quantum algorithms, cryptography, hardware, and emerging technologies in computing.

- Quantum World Congress 2026

22–24 September 2026, College Park, Maryland, USA

Global industry event focused on quantum discovery, innovation, and commercialisation pathways.

- APS March Meeting 2026

15–20 March 2026, Denver, Colorado, USA

Broad physics and quantum science meeting with significant engineering and industrial relevance.

- IEEE qCCL 2026 (Quantum Control, Computing, and Learning)

1–3 July 2026, Aalborg, Denmark

Conference at the intersection of quantum computing, control theory, and machine learning.

Continue Exploring

If you would like to explore more work in this area, see the related articles in the Quantum section on the Alpinum website:

👉 https://alpinumconsulting.com/resources/blogs/quantum/

For discussion, collaboration, or technical engagement, contact Alpinum Consulting here:

👉 https://alpinumconsulting.com/contact-us/

Written by: Mike Bartley

Mike started in software testing in 1988 after completing a PhD in Math, moving to semiconductor Design Verification (DV) in 1994, verifying designs (on Silicon and FPGA) going into commercial and safety-related sectors such as mobile phones, automotive, comms, cloud/data servers, and Artificial Intelligence. Mike built and managed state-of-the-art DV teams inside several companies, specialising in CPU verification.

Mike founded and grew a DV services company to 450+ engineers globally, successfully delivering services and solutions to over 50 clients.

Mike started Alpinum in April 2025 to deliver a range of state-of-the-art industry solutions:

- Alpinum AI provides tools and automations using Artificial Intelligence to help companies reduce development costs (by up to 90%!).
- Alpinum Services provides RTL to GDS VLSI services from nearshore and offshore centres in Vietnam, India, Egypt, Eastern Europe, Mexico and Costa Rica.
- Alpinum Consulting also provides strategic board-level consultancy services, helping companies to grow.
- Alpinum training department provides self-paced, fully online training in SystemVerilog, UVM Introduction and Advanced, Formal Verification, DV methodologies for SV, UVM, VHDL and OSVVM, and CPU/RISC-V.
- Alpinum Events organises a number of free-to-attend industry events.

You can contact Mike (mike@alpinumconsulting.com or +44 7796 307958) or book a meeting with Mike using Calendly (https://calendly.com/mike-alpinumconsulting).
